Penmachine

23 April 2010

What Darwin didn't get wrong

Last October I reviewed three books about evolution: Neil Shubin's Your Inner Fish, Jerry Coyne's Why Evolution is True, and Richard Dawkins's The Greatest Show on Earth: The Evidence for Evolution. It was a long review, but pretty good, I think.

There's another long multi-book review just published too. This one's written by the above-mentioned Jerry Coyne (who will be in Vancouver for a talk on fruit flies this weekend), and it covers both Dawkins's book and a newer one, What Darwin Got Wrong, by Jerry Fodor and Massimo Piattelli-Palmarini, which has been getting some press.

Darwin got a lot of things wrong, of course. There were a lot of things he didn't know, and couldn't know, about Earth and life on it—how old the planet actually is (4.6 billion years), that the continents move, that genes exist and are made of DNA, the very existence of radioactivity or of the huge varieties of fossils discovered since the mid-19th century.

It took decades to confirm, but Darwin was fundamentally right about evolution by natural selection. Yet that's where Fodor and Piattelli-Palmarini think he was wrong. Dawkins and Coyne disagree, siding with Darwin, as do almost all the biologists working today or over at least the past 80 years (though apparently not Piattelli-Palmarini).

I'd encourage you to read the whole review at The Nation, but to sum up Coyne's (and others') analysis of What Darwin Got Wrong, Fodor (a philosopher) and Piattelli-Palmarini (a molecular biologist and cognitive scientist) seem to base their argument on, of all things, word games. They don't offer religious or contrary scientific arguments, nor do they dispute that evolution happens, just that natural selection, as an idea, is somehow a logical fallacy.

Here's how Coyne tries to digest it:

If you translate [Fodor and Piattelli-Palmarini's core argument] into layman's English, here's what it says: "Since it's impossible to figure out exactly which changes in organisms occur via direct selection and which are byproducts, natural selection can't operate." Clearly, [they] are confusing our ability to understand how a process operates with whether it operates. It's like saying that because we don't understand how gravity works, things don't fall.

I've read some excerpts of the book, and it also appears to be laden with eumerdification: writing so dense and jargon-filled it seems to be that way to obscure rather than clarify. I suspect Fodor and Piattelli-Palmarini might have been so clever and convoluted in their writing that they even fooled themselves. That's a pity, because on the face of it, their book might have been a valuable exercise, but instead it looks like a waste of time.

Coyne, by the way, really likes Dawkins's book, probably more than I did. I certainly think it's a more worthwhile and far more comprehensible read.

Labels: books, controversy, darwin, evolution, review, science

08 April 2010

Another relative

It's quite astonishing how many fossils of extinct human relatives paleontologists have found in recent years. Just in the past year, I've mentioned Darwinius and Ardipithecus. And this week we hear about Australopithecus sediba, which sheds further light on how ancient apes transitioned to walking upright like we do.

It's a relief that in the latest coverage, the scientists involved have gone out of their way to say that A. sediba is not a "missing link":

"The 'missing link' made sense when we could take the earliest fossils and the latest ones and line them up in a row. It was easy back then," explained Smithsonian Institution paleontologist Richard Potts. But now researchers know there was great diversity of branches in the human family tree rather than a single smooth line.

All of evolution works that way: branching, somewhat messy relationships between organisms, with many extinct species and a few (or perhaps many) survivors. In the case of hominids, we humans are the only bipedal ones left, and we're also by far the most numerous of the surviving lineage, which includes chimpanzees, bonobos, gorillas, and orangutans too. So our evolutionary past can look linear, at least during the past 5 to 7 million years since our ancestral line diverged from the chimp ancestral line, even though it wasn't.

While we have found quite a few fossils of human relatives, that's a very relative term. Until the past few thousand years, there weren't many of us around at all. All the Australopithecus fossils ever found can be outnumbered by the number of trilobite fossils (which are hundreds of millions of years older!) in a single chunk of stone at any rocks-and-gems store. Hominid fossils are still extremely rare things, so we can't reconstruct the branches of our family tree with perfect accuracy. That's because we can't be sure which (if any) of the species we've unearthed were our direct ancestors, and which were our "cousins"—branches of the tree that died out.

Still, we can make attempts with the data we have, some making more assumptions than others, and the options being quite complex. Yet as we find out more, and discover more, our family tree becomes clearer. That's pretty cool.

Labels: africa, evolution, history, science

15 March 2010

Studying for jobs that don't exist yet

After high school, there are any number of specialized programs you can follow that have an obvious result: training as an electrician, construction worker, chef, mechanic, dental hygienist, and so on; law school, medical school, architecture school, teacher college, engineering, library studies, counselling psychology, and other dedicated fields of study at university; and many others.

But I don't think most people who get a high school diploma really know very well what they want to do after that. I certainly didn't. And it's just as well.

At the turn of the 1990s, I spent two years as student-elected representative to the Board of Governors of the University of British Columbia, which let me get to know some fairly high mucky-muck types in B.C., including judges, business tycoons, former politicians, honourees of the Order of Canada, and of course high-ranking academics. One of those was the President of UBC at the time, Dr. David Strangway.

In the early '70s, before becoming an academic administrator, he had been Chief of the Geophysics Branch for NASA during the Apollo missions—he was the guy in charge of the geophysical studies U.S. astronauts performed on the Moon, and the rocks they brought back. And Dr. Strangway told me something important, which I've remembered ever since and have repeated to many people over the past couple of decades.

That is, when he got his physics and geology degree in 1956 (a year before Sputnik), no one seriously thought we'd be going to the Moon. Certainly not within 15 years, or probably anytime within Strangway's career as a geophysicist. So, he said to me, when he was in school, he could not possibly have known what his job would be, because NASA, and the entire human space program, didn't exist yet.

In a much less grandiose and important fashion, my experience proved him right. Here I am writing for the Web (for free in this case), and that's also what I've been doing for a living, more or less, since around 1997. Yet when I got my university degree (in marine biology, by the way) in 1990, the Web hadn't been invented. I saw writing and editing in my future, sure, since it had been—and remains—one of my main hobbies, but how could I know I'd be a web guy when there was no Web?

The best education prepares you for careers and avocations that don't yet exist, and perhaps haven't been conceived by anyone. Because of Dr. Strangway's story, and my own, I've always told people, and advised my daughters, to study what they find interesting, whatever they feel compelled to work hard at. They may not end up in that field—I'm no marine biologist—but they might also be ready for something entirely new.

They might even be the ones to create those new things to start with.

Labels: biology, education, memories, moon, school, science, vancouver, work

06 March 2010

Ida: now just a nice fossil

Remember the crazy hype last year about "Ida," the beautifully preserved 47-million-year-old primate? The one I called a "cool fossil that got turned into a publicity stunt"?

It turns out that, yes, the original authors seem to have rushed their paper about Ida into publication, presumably in order to meet a deadline for a TV special. And even by the loosest definition of the term, Ida is no "missing link" whatsoever, and not closely related to humans. (Not that relatedness to humans is what should make a fossil important, mind you.)

So now, like Ardi, who's ten times younger, Ida is what it deserves to be: a fascinating set of remains from which we can learn many things, but not anything that fundamentally revolutionizes our understanding of primate evolution. And that's a good thing.

Labels: africa, controversy, evolution, history, science, television

14 January 2010

Quake risks

Five years ago I wrote a long series of posts about the Indian Ocean earthquake and tsunami, compiling information from around the Web and using my training in marine biology and oceanography to help explain what happened. Nearly 230,000 people died in that event.

Tuesday's magnitude-7 quake in Haiti looks to be a catastrophe of similar scale. I first learned of it through Twitter, which seems to be a key breaking-news technology now. Hearing that it was 7.0 on the Richter scale and was centred on land, only 25 kilometres from Port-au-Prince, I immediately thought, "Oh man, this is bad."

And it is. Some 50,000 are dead already, and more will die among the hundreds of thousands injured or missing. Haiti is, of course, one of the world's poorest countries, which makes things worse. Learning from aid efforts around the Indian Ocean in 2005 and from other disasters, the Canadian government is offering to match donations from Canadians for relief in Haiti.

This is a reminder that we live on a shifting, active planet, one with no opinions or cares about us creatures who cling like a film on its thin surface. We have learned, recently, to forecast weather, and to know where dangers from earthquakes, volcanoes, floods, storms, and other natural risks might lie. But we cannot predict them precisely, and some of the places people most like to live—flat river valleys, rich volcanic soils, fault-riddled landscapes, monsoon coastlines, tornado-prone plains, steep hillsides—are also dangerous.

Worse yet, the danger may not express itself over one or two or three human lifetimes. My city of Vancouver is in an earthquake zone, and also sits not far from at least a couple of substantial volcanoes. Yet it has been a city for less than 125 years. Quakes and eruptions happen in this region all the time—on a geological timescale. That still means that there has been no large earthquake or volcanic activity here since before Europeans arrived.

We would, I hope, do better than Port-au-Prince in a similar earthquake, but such chaos is not purely a problem of the developing world. The Earth, nature, and the Universe don't take any of our needs into account (no matter what foolishness people like Pat Robertson might say). We are at risk all over the world, and when the worst happens, we need to help each other.

Labels: americas, disaster, probability, science

24 November 2009

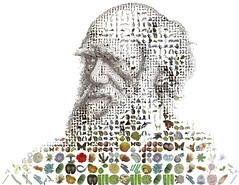

"The Origin" at 150

I wrote about it in much more detail back in February, but today is the actual 150th anniversary of Charles Darwin's On the Origin of Species, which was first published on November 24, 1859. That was more than two decades after Darwin first formulated his ideas about evolution by natural selection.

Some have called The Origin the most important book ever written, though of course many would dispute that. It's certainly up there on the list, and unequivocally on top for the field of biology. Darwin, along with others like Galileo, radically changed our perceptions about our place in the universe.

But Darwin was a scientist, not an inventor: he discovered natural selection, but did not create it. We honour him for being smart and tenacious, for being the first to figure out the basic mechanism that generated the history of life, and for writing eloquently and persuasively about it. His big idea was right (even if it took more than 70 years to confirm), but some of his conjectures and mechanisms turned out to be wrong.

He was also, from all accounts, an exceedingly nice man. Among towering intellects and important personalities, that's pretty unusual too.

Labels: anniversary, books, controversy, evolution, science

20 November 2009

See the world

Since 1972, the Landsat satellites have been photographing the surface of the Earth from space. However, the amount of data involved meant that only recently could researchers start assembling the millions of images into an actual map, where they could all be viewed as a mosaic.

NASA has posted an article explaining the process. The map covers only land areas (including islands), but it is not static—it includes data from different years so you can see changes in land use and climate.

Here's the neat thing. Back in the 1980s, while the information was there, putting even a single year's images into a map would have cost you at least $36 million (USD). Now you can get the whole thing online for free. Take a look. If you poke around, there's a lot more info than in Google Earth.

Labels: astronomy, geekery, photography, science, space

03 November 2009

Scary stuff, kiddies

For a long time (maybe a couple of years now), I've been having an on-and-off discussion with a friend via Facebook about God and atheism, evolution and intelligent design, and similar topics. He's a committed Christian, just as I'm a convinced atheist. While neither of us has changed the other's mind, the exchange has certainly got each of us thinking.

Something that came up for me yesterday when I was writing to him was a question I sort of asked myself: what elements of current scientific knowledge make me uncomfortable? I try not to be someone who rejects ideas solely because they contradict my philosophy. I don't, for instance, think that there are Things We Are Not Meant to Know. However, like anyone, I'm more likely to enjoy a teardown of things I disagree with. Similarly, there are things that seem to be true that part of me hopes are not.

Don't be afraid of the dark

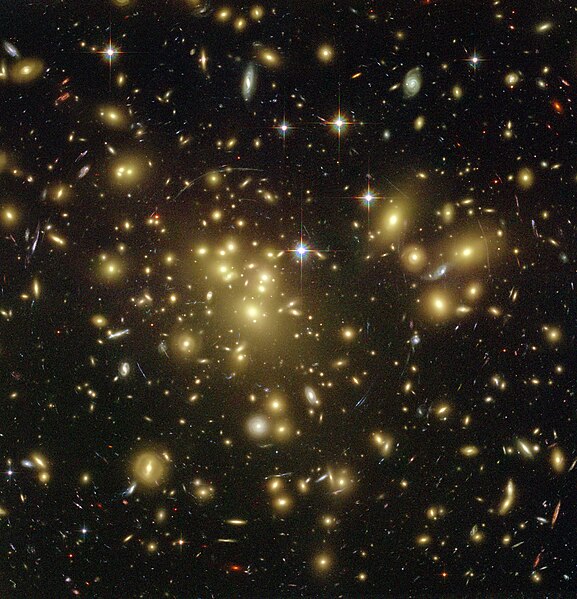

Recent discoveries in astrophysics and cosmology are a good example. Over the past couple of decades, improved observations of the distant universe have turned up a lot of evidence for dark matter and (more recently) dark energy. Those are so-far hypothetical constructs physicists have developed to explain why, for instance, galaxies rotate the way they do and the universe looks to be expanding faster than it should be. No one knows what dark matter and dark energy might actually be—they're not like any matter or energy we understand today.

But we can measure them, and they make up the vast majority of the gravitational influence visible in the universe—96% of it. So the kinds of matter and energy we're familiar with seem to compose only 4% of what exists. The rest is so bizarre that Nova host and physicist Neil deGrasse Tyson has said:

We call it dark energy but we could just as easily have called it Fred. The same is true of dark matter; 85 percent of all the gravity we measure in the universe is traceable to a substance about which we know nothing. We can call that Wilma, right? So one day we'll know what Fred and Wilma are but right now we measure the distance and those are the placeholder terms we give them.

We've been here before. Observations about the speed of light in the late 1800s contradicted some of the fundamental ideas about absolute space and time in Newtonian physics. Analysis yielded a set of new and different theories about space, time, and gravity in the early years of the 20th century. The guy who figured most of it out was Albert Einstein with his theories of relativity. It took a few more years for experiment and observation to confirm his ideas. Other theorists extended the implications into relativity's sister field of quantum mechanics—although there are still ways that general relativity and quantum mechanics don't quite square up with each other.

And if that seems obscure, keep in mind how much of the technology of our modern world—from lasers, transistors, and digital computers to GPS satellite systems and the Web you're reading this on—wouldn't work if relativity and quantum mechanics weren't true. Indeed, the very chemistry of our bodies depends on the quantum behaviour of electrons in the molecules that make us up. Modern physics has strange implications for causality and the nature of time, which make many people uncomfortable. But there's no rule that reality has to be comfortable.

Bummer, man

To the extent that I understand them, I've come to accept the fuzzy, probabilistic nature of reality at quantum scales, and the bent nature of spacetime at relativistic ones. Dark matter and energy are even pretty cool as concepts: most of the composition of the universe is still something we have only learned the very first things about.

But dark energy in particular still gives me the heebie-jeebies. That's because the reason physicists think it exists is that the universe is not only expanding, but expanding faster all the time. Dark energy, whatever it is, is pushing the universe apart.

Which means that, billions of years from now, that expansion won't slow down or reverse, as I learned it might when I was a kid watching Carl Sagan on Cosmos. Rather, it seems inevitable that, trillions of years from now, galaxies will spread so far apart that they are no longer detectable to each other, and then the stars will die, and then the black holes will evaporate, and the universe will enter a permanent state of heat death. (For a detailed description, the later chapters of Phil Plait's Death From the Skies! do a great job.)

To understate it rather profoundly, that seems like a bummer. I wish it weren't so. Sure, it's irrelevant to any of us, or to any life that has ever existed or will ever exist on any time scale we can understand. But to know that the universe is finite, with a definite end where entropy wins, bothers me. But as I said, reality has no reason to be comforting.

Either dark energy and dark matter are real, or current theories of cosmology and physics more generally are deeply, deeply wrong. Most likely the theories are largely right, if incomplete; dark matter and energy are real; and we will eventually determine what they are. There's a bit of comfort in that.

Labels: astronomy, death, science, time

28 October 2009

Evolution book review: Dawkins's "Greatest Show on Earth," Coyne's "Why Evolution is True," and Shubin's "Your Inner Fish"

Next month, it will be exactly 150 years since Charles Darwin published On the Origin of Species in 1859. This year also marked what would have been his 200th birthday. Unsurprisingly, there are a lot of new books and movies and TV shows and websites about Darwin and his most important book this year.

Of the books, I've bought and read three of the highest-profile ones: Neil Shubin's Your Inner Fish (actually published in 2008), Jerry Coyne's Why Evolution is True, and Richard Dawkins's The Greatest Show on Earth: The Evidence for Evolution. They're all written by working (or, in Dawkins's case, officially retired) evolutionary biologists, but are aimed at a general audience, and tell compelling stories of what we know about the history of life on Earth and our part in it. I also re-read my copy of the first edition of the Origin itself, as well as legendary biologist Ernst Mayr's 2002 What Evolution Is, a few months ago.

Why now?

Aside from the Darwin anniversaries, I wanted to read the three new books because a lot has changed in the study of evolution since I finished my own biology degree in 1990. Or, I should say, not much has changed, but we sure know a lot more than we did even 20 years ago. As with any strong scientific idea, evidence continues accumulating to reinforce and refine it. When I graduated, for instance:

- DNA sequencing was rudimentary and horrifically expensive, and the idea of compiling data on an organism's entire genome was pretty much a fantasy. Now it's almost easy, and scientists are able to compare gene sequences to help determine (or confirm) how different groups of plants and animals are related to each other.

- Our understanding of our relationship with chimpanzees and our extinct mutual relatives (including Australopithecus, Paranthropus, Sahelanthropus, Orrorin, Ardipithecus, Kenyanthropus, and other species of Homo in Africa) was far less developed. With more fossils and more analysis, we know that our ancestors walked upright long before their brains got big, and that raises a host of new and interesting questions.

- The first satellite in the Global Positioning System had just been launched, so it was not yet easily possible to monitor continental drift and other evolution-influencing geological activities happening in real time (though of course it was well accepted from other evidence). Now, whether it's measuring how far the crust shifted during earthquakes or watching as San Francisco marches slowly northward, plate tectonics is as real as watching trees grow.

- Dr. Richard Lenski and his team had just begun what would become a decades-long study of bacteria, which eventually (and beautifully) showed the microorganisms evolving new biochemical pathways in a lab over tens of thousands of generations. That's substantial evolution occurring by natural selection, incontrovertibly, before our eyes.

- In Canada, the crash of Atlantic cod stocks and controversies over salmon farming in the Pacific hadn't yet happened, so the delicate balances of marine ecosystems weren't much in the public eye. Now we understand that human pressures can disrupt even apparently inexhaustible ocean resources, while impelling fish and their parasites to evolve new reproductive and growth strategies in response.

- Antibiotic resistance (where bacteria in the wild evolve ways to prevent drugs from being as effective as they used to) was on few people's intellectual radar, since it didn't start to become a serious problem in hospitals and other healthcare environments until the 1990s. As with cod, we humans have unwittingly created selection pressures on other organisms that work to our own detriment.

...and so on. Perhaps most shocking in hindsight, back in 1990 religious fundamentalism of all stripes seemed to be on the wane in many places around the world. By association, creationism and similar world views that ignore or deny that biological evolution even happens seemed less and less important.

Or maybe it just looked that way to me as I stepped out of the halls of UBC's biology buildings. After all, whether studying behavioural ecology, human medicine, cell physiology, or agriculture, no one there could get anything substantial done without knowledge of evolution and natural selection as the foundations of everything else.

Why these books?

The books by Shubin, Coyne, and Dawkins are not only welcome and useful in 2009, they are necessary. Because unlike in other scientific fields—where even people who don't really understand the nature of electrons or fluid dynamics or organic chemistry still accept that electrical appliances work when you turn them on, still fly in planes and ride ferryboats, and still take synthesized medicines to treat diseases or relieve pain—there are many, many people who don't think evolution is true.

No physicians must write books reiterating that, yes, bacteria and viruses are what spread infectious diseases. No physicists have to re-establish to the public that, honestly, electromagnetism is real. No psychiatrists are compelled to prove that, indeed, chemicals interacting with our brain tissues can alter our senses and emotions. No meteorologists need argue that, really, weather patterns are driven by energy from the Sun. Those things seem obvious and established now. We can move on.

But biologists continue to encounter resistance to the idea that differences in how living organisms survive and reproduce are enough to build all of life's complexity—over hundreds of millions of years, without any pre-existing plan or coordinating intelligence. But that's what happened, and we know it as well as we know anything.

If the Bible or the Qur'an is your only book, I doubt much will change your mind on that. But many of the rest of those who don't accept evolution by natural selection, or who are simply unsure of it, may have been taught poorly about it back in school. Or if not, they might have forgotten the elegant simplicity of the concept. Not to mention the huge truckloads of evidence to support evolutionary theory, which is as overwhelming as (if not more so than), and more immediate than, the also-substantial evidence for our theories about gravity, weather forecasting, germs and disease, quantum mechanics, cognitive psychology, or macroeconomics.

Enjoying the human story

So, if these three biologists have taken on the task of explaining why we know evolution happened, and why natural selection is the best mechanism to explain it, how well do they do the job? Very well, but also differently. The titles tell you.

Shubin's Your Inner Fish is the shortest, the most personal, and the most fun. Dawkins's The Greatest Show on Earth is, well, the showiest, the biggest, and the most wide-ranging. And Coyne's Why Evolution is True is the most straightforward and cohesive argument for evolutionary biology as a whole—if you're going to read just one, it's your best choice.

However, of the three, I think I enjoyed Your Inner Fish the most. Author Neil Shubin was one of the lead researchers in the discovery and analysis of Tiktaalik, a fossil "fishapod" found on Ellesmere Island here in Canada in 2004. It is yet another demonstration of the predictive power of evolutionary theory: knowing that there were lobe-finned fossil fish about 380 million years ago, and obviously four-legged land dwelling amphibian-like vertebrates 15 million years later, Shubin and his colleagues proposed that an intermediate form or forms might exist in rocks of intermediate age.

Ellesmere Island is a long way from most places, but it has surface rocks about 375 million years old, so Shubin and his crew spent a bunch of money to travel there. And sure enough, there they found the fossil of Tiktaalik, with its wrists, neck, and lungs like a land animal, and gills and scales like a fish. (Yes, it had both lungs and gills.) Shubin uses that discovery to take a voyage through the history of vertebrate anatomy, showing how gill slits from fish evolved over tens of millions of years into the tiny bones in our inner ear that let us hear and keep us balanced.

Since we're interested in ourselves, he maintains a focus on how our bodies relate to those of our ancestors, including tracing the evolution of our teeth and sense of smell, even the whole plan of our bodies. He discusses why the way sharks were built hundreds of millions of years ago led to human males getting certain types of hernias today. And he explains why, as a fish paleontologist, he was surprisingly qualified to teach an introductory human anatomy dissection course to a bunch of medical students—because knowing about our "inner fish" tells us a lot about why our bodies are this way.

Telling a bigger tale

Richard Dawkins and Jerry Coyne tell much bigger stories. Where Shubin's book is about how we people are related to other creatures past and present, the other two seek to explain how all living things on Earth relate to each other, to describe the mechanism of how they came to the relationships they have now, and, more pointedly, to refute the claims of people who don't think those first two points are true.

Dawkins best expresses the frustration of scientists with evolution-deniers and their inevitable religious motivations, as you would expect from the world's foremost atheist. He begins The Greatest Show on Earth with a comparison. Imagine, he writes, you were a professor of history specializing in the Roman Empire, but you had to spend a good chunk of your time battling the claims of people who said ancient Rome and the Romans didn't even exist. This despite those pesky giant ruins modern Romans have had to build their roads around, and languages such as Italian, Spanish, Portuguese, and French that are obviously derived from Latin (with English borrowing heavily from it too), not to mention the libraries and museums and countrysides full of further evidence.

He also explains, better than anyone I've ever read, why various ways of determining the ages of very old things work. If you've ever wondered how we know when a fossil is 65 million years old, or 500 million years old, or how carbon dating works, or how amazingly well different dating methods (tree ring information, radioactive decay products, sedimentary rock layers) agree with one another, read his chapter 4 and you'll get it.
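For the curious, the arithmetic behind radioactive decay dating fits in a few lines. Here's a minimal Python sketch, assuming a simple exponential decay model and the commonly cited carbon-14 half-life of about 5,730 years. The function name is my own, not anything from Dawkins's book, and real labs also calibrate for changes in atmospheric carbon-14 over time, which this ignores.

```python
import math

# Commonly cited approximate half-life of carbon-14, in years (an assumption
# for this sketch; other isotopes have vastly longer half-lives).
C14_HALF_LIFE_YEARS = 5730


def age_from_remaining_fraction(remaining_fraction, half_life_years):
    """Estimate a sample's age from the fraction of the original isotope left.

    Each half-life halves the remaining amount, so the age is the number of
    halvings (log base 2 of 1/fraction) times the half-life.
    """
    if not 0 < remaining_fraction <= 1:
        raise ValueError("remaining_fraction must be in the range (0, 1]")
    return half_life_years * math.log2(1 / remaining_fraction)


if __name__ == "__main__":
    # A sample with a quarter of its original carbon-14 has been through
    # two half-lives.
    print(age_from_remaining_fraction(0.25, C14_HALF_LIFE_YEARS))
```

The same formula works for any isotope if you swap in its half-life, which is why carbon-14 is only useful for relatively recent remains while slower-decaying isotopes are used for rocks hundreds of millions of years old.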

Alas, while there's a lot of wonderful information in The Greatest Show on Earth, and many fascinating photos and diagrams, Dawkins could have used some stronger editing. The overall volume comes across as scattershot, assembled more like a good essay collection than a well-planned argument. Dawkins often takes needlessly long asides into interesting but peripheral topics, and his tone wanders.

Sometimes his writing cuts precisely, like a scalpel; other times, his breezy footnotes suggest a doddering old Oxford prof (well, that is where he's been teaching for decades!) telling tales of the old days in black school robes. I often found myself thinking, Okay, okay, now let's get on with it.

Truth to be found

On the other hand, Jerry Coyne strikes the right balance and uses the right structure. On his blog and in public appearances, Coyne is (like Dawkins) a staunch opponent of religion's influence on public policy and education, and of those who treat religion as immune to strong criticism. But that position hardly appears in Why Evolution is True at all, because Coyne wisely thinks it has no reason to. The evidence for evolution by natural selection stands on its own.

I wish Dawkins had done what Coyne does—noting what the six basic claims of current evolutionary theory are, and describing why real-world evidence overwhelmingly shows them all to be true. Here they are:

- Evolution: species of organisms change, and have changed, over time.

- Gradualism: those changes generally happen slowly, taking at least tens of thousands of years.

- Speciation: populations of organisms not only change, but split into new species from time to time.

- Common ancestry: all living things have a single common ancestor—we are all related.

- Natural selection: evolution is driven by random variations in organisms that are then filtered non-randomly by how well they reproduce.

- Non-selective mechanisms: natural selection isn't the only way organisms evolve, but it is the most important.

The rest of Coyne's book, in essence, fleshes those claims and the evidence out. That's almost it, and that's all it needs to be. He recounts too why, while Charles Darwin got all six of them essentially right back in 1859, only the first three or four were generally accepted (even by scientists) right away. It took the better part of a century for it to be obvious that he was correct about natural selection too, and even more time to establish our shared common ancestry with all plants, animals, and microorganisms.

Better than other books about evolution I've read, Why Evolution is True reveals the relentless series of tests that Darwinism has been subjected to, and survived, as new discoveries were made in astronomy, geology, physics, physiology, chemistry, and other fields of science. Coyne keeps pointing out that it didn't have to be that way. Darwin was wrong about quite a few things, but he could have been wrong about many more, and many more important ones.

If inheritance hadn't turned out to be genetic, or further fossil finds had shown an uncoordinated mix of forms over time (modern mammals and trilobites together, for instance), or no mechanism like plate tectonics had explained fossil distributions, or various methods of dating had disagreed profoundly, or there had been no imperfections in organisms to betray their history—well, evolutionary biology could have hit any number of crisis points. But it didn't.

Darwin knew nothing about some of these lines of evidence, but they support his ideas anyway. We have many more new questions now too, but they rest on the fact of evolution, largely the way Darwin figured out that it works.

The questions and the truth

Facts, like life, survive the onslaughts of time. Opponents of evolution by natural selection have always pointed to gaps in our understanding, to the new questions that keep arising, as "flaws." But they are no such thing: gaps in our knowledge tell us where to look next. Conversely, saying that a god or gods, some supernatural agent, must have made life—because we don't yet know exactly how it happened naturally in every detail—is a way of giving up. It says not only that there are things we don't know, but things we can never learn.

Some of us who see the facts of evolution and natural selection, much the way Darwin first described them, prefer not to believe things, but instead to accept them because of supporting scientific evidence. But I do believe something: that the universe is coherent and comprehensible, and that trying to learn more about it is worth doing for its own sake.

In the 150 years since the Origin, people who believed that—who did not want to give up—have been the ones who helped us learn who we, and the other organisms who share our planet, really are. Thousands of researchers across the globe help us learn that, including Dawkins exploring how genes, and ideas, propagate themselves; Coyne peering at Hawaiian fruit flies through microscopes to see how they differ over generations; and Shubin trekking to the Canadian Arctic on the educated guess that a fishapod fossil might lie there.

The writing of all three authors pulses with that kind of enthusiasm—the urge to learn the truth about life on Earth, over more than 3 billion years of its history. We can admit that we will always be somewhat ignorant, and will probably never know everything. Yet we can delight in knowing there will always be more to learn. Such delight, and the fruits of the search so far, are what make these books all good to read during this important anniversary year.

Labels: anniversary, books, controversy, evolution, religion, review, science

22 October 2009

Spin my ribcage

Derived from the same CT scan of my body taken a few weeks ago is this 3D movie, also made with the open-source OsiriX software:

You can clearly see my portacath, which showed up just as well as my ribs. Freaky.

Labels: cancer, chemotherapy, ctscan, geekery, photography, science, software, videogames

20 October 2009

See my cancer

Here, take a look at this extremely cool and scientifically amazing picture:

That's me, via a few slices from my latest CT scan, taken at the end of September 2009. I opened the files provided to me by the B.C. Cancer Agency's Diagnostic Imaging department using the open-source program OsiriX, giving me my first chance to take a first-hand look at my cancer in almost a year. Before that, I'd only seen my original colon tumour on the flexible sigmoidoscopy camera almost three years ago.

I've circled the biggest lung tumours metastasized from my original colon cancer (which was removed by surgery in mid-2007). You can see the one in my upper left lung and two (one right behind the other) in my lower left lung. There are six more tumours, all smaller, not easily visible in this view. I'm not a radiologist, so I couldn't readily distinguish the smallest ones from regular lung matter and other tissue. Nevertheless, now we can all see what I'm dealing with.

These blobs of cancer have all grown slightly since I started treatment with cediranib in November 2008. To my untrained eye, the view doesn't look that different from the last time I saw my scan in December of that year, which is fairly good as far as I'm concerned. Not as good as if they'd stabilized or shrunk, but better than many other possibilities.

Labels: cancer, chemotherapy, ctscan, geekery, photography, science, software, video

19 October 2009

Links of interest (2009-10-19):

From my Twitter stream:

- My dad had cataract surgery, and now that eye has perfect vision—he no longer needs a corrective lens for it for distance (which, as an amateur astronomer, he likes a lot).

- Darren's Happy Jellyfish (bigger version) is my new desktop picture.

- Ten minutes of mesmerizing super-slo-mo footage of bullets slamming into various substances, with groovy bongo-laden soundtrack.

- SOLD! Sorry if you missed out. I have a couple of 4th-generation iPod nanos for sale, if you're interested.

- Great backgrounder on the 2009 H1N1 flu virus—if you're at all confused about it, give this a read.

- The new Nikon D3s professional digital SLR camera has a high-gain maximum light sensitivity of ISO 102,400. By contrast, when I started taking photos seriously in the 1980s, ISO 1000 film was considered high-speed. The D3s can get the same exposure with 100 times less light, while producing perfectly acceptable, if grainy, results.

- Nice summary of how content-industry paranoia about technology has been wrong for 100 years.

- The Obama Nobel Prize makes perfect sense now.

- I like these funky fabric camera straps (via Ken Rockwell).

- I briefly appear on CBC's "Spark" radio show again this week.

- Here's a gorilla being examined in the same type of CT scan machine I use every couple of months. More amazing, though, is the mummified baby woolly mammoth. Wow.

- As I discovered a few months ago, in Canada you can use iTunes gift cards to buy music, but not iPhone apps. Apple originally claimed that was to comply with Canadian regulations, but it seems that's not so—it's just a weird and inexplicable Apple policy. (Gift cards work fine for app purchases in the U.S.A.)

- We've released the 75th episode of Inside Home Recording.

- These signs from The Simpsons are indeed clever, #1 in particular.

- Since I so rarely post cute animal videos, you'd better believe that this one is a doozy (via Douglas Coupland, who I wouldn't expect to post it either).

- If you're a link spammer, Danny Sullivan is quite right to say that you have no manners or morals, and you suck.

- "Lock the Taskbar" reminds me of Joe Cocker, translated.

- A nice long interview with Scott Buckwald, propmaster for Mad Men.

Labels: animals, apple, biology, cartoon, geekery, humour, insidehomerecording, iphone, ipod, itunes, linksofinterest, nikon, photography, politics, science, surgery, television, video, web

02 October 2009

Ardi is another fascinating hominid fossil, but "missing link" no longer makes sense

First, let's get something out of the way: the term missing link has long been useless, especially in human palaeontology. That's reinforced by this week's publication of a special issue of the journal Science, focusing on the 4.4-million-year-old skeleton of Ardipithecus ramidus, nicknamed "Ardi."

Chimpanzees are the closest living relatives of human beings, but our common ancestor lived a long time ago—at least 6 million years, likely more. When Charles Darwin proposed, some 150 years ago, that the fossil links between humans and chimps were likely to be found in Africa, he was right, but at the time those links really were missing.

It took a few decades until the first Homo erectus fossils were found in Asia. Since then, palaeontologists have found many different skeletons and skeletal parts of Homo, Paranthropus, and Australopithecus, with the oldest, as predicted, all in Africa. We've been filling in our side of the chimp-human family tree for over a century. (The chimpanzee lineage has been more difficult, probably because their ancestors' forest habitats are less prone to preserving fossils—and we're less prone to paying as much attention to it too.)

So it's been a long time since the links were missing, and the term missing link is now more misleading than helpful. Like "Ida," the 47-million-year-old primate revealed with too much hype earlier this year, "Ardi" is no missing link, but she is another fascinating fossil showing interesting things about our ancestry. Most notably, she walked upright as we do, but had a much smaller brain, more like other apes—as well as feet that remained better for climbing trees than ours.

None of that should be shocking. We shouldn't expect any common ancestor of chimps and humans to be more like either one of us, since both lineages have kept evolving these past 6 million years. Ardi isn't a common ancestor—she's more closely related to humans than to chimps, and accordingly shows more human characteristics (or, perhaps better, we show characteristics more like hers), like bipedal walking and flexible wrists. That's extremely cool.

Also interestingly, "Ardi" is not new. It's taken researchers more than 15 years to analyze her, and similar fossils found nearby in Ethiopia's Afar desert. Simply teasing the extremely fragile, powdery fossil materials out of the soil without destroying them took years. Science can sometimes be a painstaking process, not for those who lack patience.

Labels: africa, controversy, evolution, history, science

30 September 2009

Blasphemy: funny if it weren't often so dangerous

Today is International Blasphemy Day (of course there are a Facebook page and group). The event is held on the anniversary of the 2005 publication in Denmark of those infamous cartoons of the Prophet Muhammad. Subversive cartoonist Robert Crumb is coincidentally in on the action this year too, with his illustrated version of the Book of Genesis.

Blasphemy Day isn't aimed merely at Islam or Christianity, but at all and any religions and sects that include the concepts of blasphemy, apostasy, desecration, sacrilege, and the like. "Ideas don't need rights," goes the tagline, "people do."

While my grandfather was a church musician, and my parents had me baptized and took me to services for a short while as a youngster, I've never been religious, so no doubt I blaspheme regularly without even thinking about it. I've written plenty about religion on this blog in the past few years, often blasphemously in someone's opinion, I'm sure. In 2007 I wrote my preferred summary of my attitude:

The beauty of a globular cluster or a diatom, the jagged height of a mountain or the depth of geological time—to me, these are natural miracles, not supernatural ones.

In that same post, I also wrote tangentially about blasphemy:

...given the scope of this universe, and any others that might exist, why would any god or gods be so insecure as to require regulated tributes from us in order to be satisfied with their accomplishments?

If the consequences—imposed by humans against each other, by the way—weren't so serious in so many places, the idea of blasphemy would be very funny. Even if there were a creator (or creators) of the Universe, how could anything so insignificant as a person, or even the whole population of a minuscule planet, possibly insult it?

We're talking about the frickin' Universe here. (Sorry, should be properly blasphemous: the goddamned Universe.) You know, 13.7 billion years old? Billions of galaxies, with billions of stars each? That one? Anything happening here on Earth is, on that scale, entirely irrelevant.

To my mind, there are no deities anyway. But if you believe there are, please consider this: it's silly to think that a god or gods could be emotionally fragile enough to be affected by our thoughts and behaviours, and even sillier to believe that people could or should have any role in enforcing godly rules. Silliest yet is that believers in a particular set of godly rules should enforce those rules on people who don't share the same belief.

Being a good person is worth doing for its own sake, and for the sake of our fellow creatures. Sometimes being good, or even simply being accurate, may require being blasphemous by someone else's standards. Today is a day to remember that.

Labels: controversy, linkbait, politics, religion, science

26 September 2009

Links of interest (2009-09-26):

- Photo sharing site Flickr has these new Gallery things.

- Homemade stratosphere camera rig goes sub-orbital to 93,000 feet (18 miles). Total cost? $150.

- Suw Charman's life is "infested with yetis."

- AIS is the way that commercial ships and boats report their near-coastal positions for navigation. The Live Ships Map uses AIS data to show almost-real-time positions for vessels all around the world. Zoom in and be amazed.

- Strong Bad Email #204 had me laughing uncontrollably. Make sure to click around on the end screen for the easter eggs.

- Julia Child boils up some primordial soup. Really.

- Funky bracelets made from old camera lens housings. Nerdy, yet cool.

- Adobe Photoshop Elements 8: most of the cool features, about 85% cheaper than regular Photoshop.

- Vancouver's awesome and inexpensive Argo Cafe finally gets coverage in the New York Times.

- When people ask me to spell a word out loud, I notice that I scrunch up my face while I visualize the letters behind my eyelids.

- Via Jeff Jarvis: in the future, if politicians have nothing embarrassing on the Net, we'll all wonder what it is they're hiding and why they've spent so much effort expunging it.

Labels: cartoon, evolution, flickr, food, linksofinterest, marine, photography, politics, restaurant, science, software, vancouver, web

21 August 2009

Gnomedex 2009 day 1

My wife Air live blogged the first day's talks here at the 9th annual Gnomedex conference, and you can also watch the live video stream on the website. I posted a bunch of photos. Here are my written impressions.

Something feels a little looser, and perhaps a bit more relaxed, about this year's meeting. There's a big turnover in attendees: more new people than usual, more women, and a lot more locals from the Seattle area. More Windows laptops than before, interestingly, and more Nikon cameras with fewer Canons. A sign of tech gadget trends generally? I'm not sure.

As always, the individual presentations roamed all over the map, and some were better than others. For example, Bad Astronomer Dr. Phil Plait's talk about skepticism was fun, but also not anything new for those of us who read his blog. However, it was also great as a perfect precursor to Christine Peterson, who invented the term open source some years ago, but is now focused on life extension, i.e. using various dietary, technological, and other methods to improve health and significantly extend the human lifespan.

- Some stuff Dr. Plait said: "Skepticism is not cynicism." "You ask for the evidence [...] and make sure it's good." "Be willing to drop an idea if it's wrong. Yeah, that's tough." "Scientists screw it up as well." "It sucks to be fooled. You can lose your money. You can lose your life."

- Christine Peterson: "Moving is how you tell your body, I'm not dead yet!" "You see people hitting soccer balls with their heads. Would you do that with your laptop? And that's backed up!" (You might like my friend Bill's reaction on my Facebook page.)

As Lee LeFever quipped on Twitter, "The life extension talk is a great followup to the skepticism talk because it provides so many ideas of which to be skeptical." My thought was, her talk seemed like hard reductionist nerdery focused somewhere it may not apply very well. My perspective may be different because I have cancer; for me, life extension is just living, you know? But I also feel that not everything is an engineering problem.

There were a number of those dichotomies through the day. Some other notes I took today:

- Bre Pettis passed out 3D model "printouts" created with the MakerBot he helped design. "Bonus points for being able to print out your... uh... body... parts." "Oh my god, you should put this brain inside Walt Disney's head!" "What's black ABS plastic good for?" "Printing evil stuff."

- One of the most joyous things you'll ever see is a keen scientist really going off on his or her topic of specialty. Firas Khatib on FoldIt protein folding was one of those. For a given sequence of amino acids, the 3D protein structure with lowest free energy is likely to be its useful shape in biology—and his team made a video game to help people figure out optimum shapes, which in the long run can help cure diseases.

- Todd Friesen is a former search engine and website spammer. He had lots of interesting things to say today. In the world of white and black search engine optimization (SEO), SPAM = "Sites Positioned Above Mine." For spammers, RSS = "Really Simple Stealing" and thus spam blogs. Major techniques for web spammers: hacking pages, bribing people for access, forum posts and user profiles, comment spam. Pay Per Click = PPC = "Pills, Porn, and Casinos."

- I liked these from the Ignite super-fast presentations: "There are more social media non-gurus than social media gurus. Which means we can take them." On annual reports: "Imagine waiting A YEAR to find out what a company is doing."

We had a great trip down to Seattle via Chuckanut Drive with kk+ and Fierce Kitty. Tonight Air and I are sleeping in the Edgewater Hotel on Seattle's Pier 67, next to the conference venue, and tonight is also the 45th anniversary of the day the Beatles stayed in this same hotel and fished out the window.

Labels: anniversary, band, biology, conferences, geekery, gnomedex, history, music, science, travel

24 July 2009

Splashdown

Today we mark 40 years since the Apollo 11 Command Module Columbia splashed down in the middle of the Pacific Ocean, a few hundred kilometres from the now-closed Johnston Island naval base:

Our first (and sixth last!) trip to the surface of the Moon was over. The seared, beaten Columbia (weighing less than 6,000 kg, and which had remained shiny and pristine until its re-entry into the Earth's atmosphere), with its passengers, was the only part of the titanic Saturn V rocket (3 million kg) to return home after a little over one week away. Every other component had been designed to burn up during launch or return, to stay on the Moon's surface (where those parts remain), to smash into the Moon, or to drift in its own orbit around the Sun.

All three astronauts returned safely, and suffered no ill effects, despite being quarantined for two and a half weeks, until August 10, 1969, when I was six weeks old.

Labels: anniversary, astronomy, holiday, moon, oceans, science, space

21 July 2009

Going home

Just before noon today, Pacific Time, marks exactly 40 years since Neil Armstrong and Buzz Aldrin launched their Lunar Module Eagle and left the surface of the Moon, to rendezvous with their colleague Michael Collins in lunar orbit:

They were on their way home to Earth, though it would take a few days to get back here.

Labels: anniversary, astronomy, holiday, moon, science, space

20 July 2009

Today was the day

The beginnings of human calendars are arbitrary. We're using a Christian one right now, though the start date is probably wrong, and the monks who created it didn't assign a Year Zero. Chinese, Mayan, Hindu, Jewish, Muslim, and other calendars begin on different dates.

If we were to decide to start over with a new Year Zero, I think the choice would be easy. The dividing line would be 40 years ago today, what we call July 20, 1969. That's when the first humans—the first creatures from Earth of any kind, since life began here a few billion years ago—walked on another world, our own Moon:

They landed their vehicle, the ungainly Eagle, at 1:17 p.m. Pacific Daylight Time (the time of my post here). Just before 8 p.m. PDT, Neil Armstrong put his boot on the soil. That was the moment. All three of the men who went there, Armstrong, Buzz Aldrin, and Michael Collins, are almost 80 now, but they are still alive, like the rest of the relatively small slice of humanity that was here when it happened (I was three weeks old).

If the sky is clear tonight, look up carefully for the Moon: it's just a sliver right now. Although it's our closest neighbour in space, you can cover it up with your thumb. People have been there, and when the Apollo astronauts walked on its dust, they could look up and cover the Earth with a gesture too—the place where everyone except themselves had ever lived and died. Every other achievement, every great undertaking, every pointless war—all fought over something that could be blotted out with a thumb.

Even if it doesn't start a new calendar (not yet), today should at least be a holiday, to commemorate the event, the most amazing and important thing we've ever done. Make it one yourself, and remember. Today was the day.

Labels: anniversary, astronomy, holiday, moon, science, space

16 July 2009

Launch day

Forty years ago today. July 16, 1969, 9:32 a.m. EDT, Launch Complex 39A, Cape Canaveral, Florida. Three nearly hairless apes—human beings in pressure suits—left for the Moon:

They were aboard the world's greatest machine, which put out about 175 million horsepower during launch. Less than three hours later, they exited Earth orbit and were on their way.

Labels: anniversary, astronomy, moon, science, space

28 June 2009

Links of interest 2009-06-21 to 2009-06-27

While I'm on my blog break, more edited versions of my Twitter posts from the past week, newest first:

- My wonderful wife got me a Nikon D90 camera for my 40th birthday this week. I'm thinking of selling my old Nikon D50, still a great camera. Anyone interested? I was thinking around $325. I also have a brand new 18–55 mm lens for sale with it, $150 by itself or $425 together. I have all original boxes, accessories, manuals, software, etc., and I'll throw in a memory card, plus a UV filter for the lens.

- Roger Hawkins's drum track for "When a Man Loves a Woman" (Percy Sledge 1966): tastiest ever? Hardly a fill, no toms, absolutely delicious.

- Thank you thank you thank you to everyone who came to my 40th birthday party—both for your presence and for the presents. Photos from the event, held June 27, three days early for my actual birthday on Tuesday, are now posted (please use tag "penmachinebirthday" if you post some yourself).

- I think Twitter just jumped the shark. In trending topics, Michael Jackson passed Iran, OK, but both passed by Princess Protection Program (new Disney Channel movie)?

- AT&T (and Rogers, presumably) is trying to charge MythBusters' Adam Savage $11,000 USD for some wireless web surfing here in Canada.

- After more than 12 years buying stuff on eBay, here's our first ever item for sale there. Nothing too exciting, but there you go.

- Michael Jackson's death this week made me think of comparisons with Elvis, John Lennon, and Kurt Cobain. Lennon and Cobain still seemed to have some artistic vitality ahead of them. Feel a need for Michael Jackson coverage? Jian Ghomeshi (MP3 file) on CBC in Canada is the only commentator who isn't blathering mindlessly. But as a cancer patient myself, having Farrah Fawcett and Dr. Jerri Nielsen (of South Pole fame) die of it the same day is a bit hard to take.

- Seattle's KCTS 9 (PBS affiliate) showed "The Music Instinct" with Daniel Levitin and Bobby McFerrin. If you like music or are a musician, it's worth watching, even if it's a bit scattershot, packing too much into two hours.

- New rule: when a Republican attacks gay marriage, let's assume he's cheating on his wife (via Jak King).

- The blogs and podcasts I'm affiliated with are now sold on Amazon for its Kindle e-reader device, for $2 USD a month. I know, that's weird, because they're normally free, and are even accessible for free using the Kindle's built-in web browser, so I don't know why people would pay for them—but if you want to, here you go: Penmachine, Inside Home Recording, and Lip Gloss and Laptops. Okay, we're waiting for the money to roll in...

- Great speech by David Schlesinger from Reuters to the International Olympic Committee on not restricting new media at the Olympics (via Jeff Jarvis).

- TV ad: "Restaurant-inspired meals for cats." Um, have they seen what cats bring in from the outdoors?

- I planned to record my last segment for Inside Home Recording #72, but neighbour was power washing right outside the window (in the rain!). Argh.

- You can't trust your eyes: the blue and green are actually the SAME COLOUR.

- Can you use the new SD card slot in current MacBook laptops for Time Machine backups? (You can definitely use it to boot the computer.) Maybe, but not really. SDHC cards max out at 32GB (around $100 USD); the upcoming SDXC will handle more, but none exist in Macs or in the real world yet. Unless you put very little on the MacBook's internal drive, or use System Preferences to exclude all but the most essential stuff from backups, then no, SD cards are not viable for Time Machine.

- Some stats from Sebastian Albrecht's insane thirteen-times-up-the-Grouse Grind climb in one day this week. He burned 14,000+ calories.

- Even though I use RSS extensively, I find myself manually visiting the same 5 blogs (Daring Fireball, Kottke, Darren Barefoot, PZ Myers, and J-Walk) every morning, with most interesting news covered.

- I never get tired of NASA's rocket-cam launch videos.

- Pat Buchanan hosts conference advocating English-only initiatives in the USA. But the sign over the stage is misspelled.

- Who knew the Rolling Stones made an (awesome) jingle for Rice Krispies in the mid-1960s?

- Always scary stuff behind a sentence like, "'He is an expert in every field,' said a church spokeswoman."

- Kodachrome slide film is dead, but Fujichrome Velvia killed it a long time ago. This is just the official last rites.

- My friends Dave K. and Dr. Debbie B. did the Vancouver-to-Seattle bicycle Ride to Conquer Cancer (more than 270 km in two days) last weekend. Congrats and good job!

- My daughter (11) asks on her blog: "if Dad is so internet famous, I mean, Penmachine is popular, then, maybe I am too..."

- Evolution of a photographer (via Scott Bourne).

Labels: amazon, apple, backup, birthday, cancer, film, geekery, linksofinterest, music, news, olympics, photography, politics, religion, science, space, television, twitter

11 June 2009

Going beyond common sense

A few months ago, I posted two quotes about how science works, and why it's effective:

The first principle is that you must not fool yourself—and you are the easiest person to fool. - Richard Feynman

If common sense were a reliable guide, we wouldn't need science in the first place. - Amanda Gefter

Feynman and Gefter sum up what makes science different from many other intellectual pursuits, and why it has so radically changed the human experience over the past few hundred years. Not fooling ourselves turns out to be surprisingly difficult. That's because (to dig up another thing I write about frequently here) our brains aren't built to find the truth. Often, we have to work against our own thinking to do that.

We evolved to get by and reproduce as hunter-gatherer primates on the savannah of Africa, not to follow two or more independent lines of evidence to confirm how fast the universe is expanding. Yet we have figured that out, because scientific thinking is designed to counteract our tendencies to fool ourselves. Sometimes we still do, for a while, but science also tends to be self-correcting, because it tries to force reality to trump belief.

There's an excellent article in the current issue of the academic journal Evolution: Education and Outreach, titled "Understanding Natural Selection: Essential Concepts and Common Misconceptions" (via PZ Myers). Yes, it's academic and thus (for a web page) pretty long, but there's lots of meat there, and it's written for a general audience. It's worth reading through.

The first part summarizes how natural selection works. The second part asks "why is natural selection so difficult to understand?" After all, it is elegant and logical, and has mountains (literally, in some cases) of evidence behind it, collected and analyzed and correlated and compared and verified over 150 years. However:

Much of the human experience involves overcoming obstacles, achieving goals, and fulfilling needs. Not surprisingly, human psychology includes a powerful bias toward thoughts about the "purpose" or "function" of objects and behaviors [...] the "human function compunction." This bias is particularly strong in children, who are apt to see most of the world in terms of purpose; for example, even suggesting that "rocks are pointy to keep animals from sitting on them".

In other words, one reason it's hard to understand natural selection (or quantum mechanics, or the weather, or geological time) is that we're predisposed to believe that the whole universe is like us.

Indeed, that's often not a bad place to start. Seeing that populations of organisms change over time, early evolutionary theorists proposed that the organisms changed, in effect, because they wanted to, and passed those desired changes on to their offspring. But those ideas had to be discarded when the evidence didn't support them. Similarly, long tradition indicates that many alternative medical therapies might be worth examining, but research shows that most of them don't work.

Intuition and common sense are a good way to find your way through day-to-day life, but they're not especially reliable when trying to figure out how reality works, and thus how to do things that are genuinely new.

Labels: evolution, psychology, science

31 May 2009

Ignore Oprah's health advice, please

Like most TV shows, The Oprah Winfrey Show has entertainment as its first goal. And like most men, I've rarely enjoyed it much—because it's not aimed at me. That's fine.

But when she discusses health topics, Oprah can be dangerous (here's a single-page version of that long article). You have to infer from her show that on matters of health and medical science, Ms. Winfrey herself doesn't think critically, taking quackery just as seriously as, or more seriously than, anything with real evidence behind it. For every segment from Dr. Oz about eating better and getting more exercise, there seem to be several features on snake oil and magical remedies.

Vaccines supposedly causing autism, strange hormone therapy, offbeat cosmetic surgery, odious mystical crap like The Secret—she endorses them all. Yet even when the ones she tries herself don't seem to work for her, she doesn't backtrack or correct herself. And, almost pathologically, she remains obsessed with her weight despite all her other accomplishments.

Obviously, anyone who's taking their health advice solely from Oprah Winfrey, or any other entertainment personality, is making a mistake. However, I'd go further than that. Sure, watch Oprah for the personal life stories, the freakish tales, her homey demeanor, the cool-stuff giveaways if you want. But if she's dispensing health advice, ignore what she has to say. The evidence indicates to me that, while she may occasionally be onto something good, chances are she's promoting something ineffective or hazardous instead. Taking her advice is not worth the risk.

Labels: biology, controversy, media, science, television

28 May 2009

One weird-ass ship

At first, you might think a cruise ship collided with an oil rig and then crashed into part of a highway overpass, but no, it's just the M/V Chikyu (via the Maritime Blog). Here's how the BBC describes it:

The idea was simple. Scientists wanted to drill down into the Earth's crust—and even through the crust—to get samples from the key zones 6 or 7 km down where earthquakes and lots of other interesting geological processes begin; but that was impossible with existing ships.

Solution: find six hundred million dollars, and design and build a new one.

The Chikyu was built in Japan, is 210 m long (almost 690 feet, as long as a decent-sized cruise ship), and 130 m from the keel to the top of the drilling rig (about 425 feet, taller than a Saturn V rocket floating upright in the ocean). It has a crew of 150.

The crazy structure on the front is a helicopter landing pad. During construction, the ship was nicknamed "Godzilla-maru." And you know, $600 million is a lot of money, but it's not outrageous considering what this vessel does. It's the same price as only two or three 747 jets, for instance.

Labels: geekery, japan, oceans, science, transportation

23 May 2009

Ida the fossil primate isn't a missing link, but she's become a PR stunt

If you watched the news or read the paper last week, or surfed around the Web, you probably came across one or two or ten breathless news stories about Darwinius masillae (nicknamed "Ida"), a 47 million-year-old fossil primate that was described, over and over again, as a "missing link" in human evolution. It even showed up in the ever-changing Google home page graphic.

But something in the coverage—many things, really—set off my bullshit detectors. That's because, in years of watching science news, and getting a biology degree, I've learned that the sudden appearance of a story like this (whether a medical miracle cure, a high-energy physics experiment, or a paleontological discovery) indicates that something else is pushing the hype. Most often, there's solid science in there, but the meaning of the study is probably being overplayed, obscured, or misrepresented. And sure enough, that's the case here:

- First of all, it is a wonderful fossil. A very old, essentially complete preserved skeleton and body impression of a juvenile lemur-like primate, which may or may not actually belong to the group of primates that later would include hominids, like us humans. That is super-cool. The fossil also apparently has an interesting history: it was first found over 25 years ago, and kicked around various private collections and museums in more than one piece until quite recently. Only in the past year has it been fully reassembled and analyzed, with the results published this week. That's news.

But, but, but, BUT...

- Darwinius obviously name-checks Charles Darwin. That's grandiose to start with: scientists naming a fossil after Darwin obviously think it's pretty important, and are hyping it up even before anyone else has a chance to evaluate that claim. Yet for precisely that reason, the name feels like a PR stunt to me. Actually, it makes me think of the Disney division that calls its toys Baby Einstein.

- The whole "missing link" business is a crock, whether the publishing scientists actually claim it or not. Evolutionary biology is 150 years old this year—old enough that there aren't any missing links. What I mean is, sure, scientists find new links in the relationships between living organisms all the time. They've been doing that since before Darwin and Wallace first figured out the mechanisms of natural selection.

But the term missing implies that we're still waiting for evidence that organisms evolve, that science still needs something convincing—when we've had overwhelming evidence since Darwin's Origin of Species in 1859 (and before!), while more keeps accumulating all the time. Even aside from all that, there's no indication that Darwinius is a human ancestor. It may be a link to something, and from something, but it's probably not a link from even older primates to us, which is what the news reports are saying.

- The first paper about a fossil touted as such a very important, even revolutionary discovery should appear in one of the major global journals, such as Science or Nature, or maybe the Journal of Paleontology or another high-profile publication in the field. Instead, Darwinius is first appearing in PLoS ONE, an interesting but somewhat experimental online journal from the excellent Public Library of Science.

I'm not knocking it, because PLoS ONE is legitimate, and peer-reviewed—indeed, it's doing what many scientists have argued for since the dawn of the Web in the '90s, which is make quality original scientific research available online without the insane subscription fees of traditional journals. But it's also less than three years old. If the Darwinius paper were otherwise unimpeachable, publishing it in PLoS ONE would be a great example of bringing important, leading-edge science into the 21st century of publishing. However, it felt to me instead that it appeared there because it was a fast way to get the paper out for a looming deadline.

- Ah, the press conference. It's always suspicious when a scientific discovery is announced at a press conference. When the media event happens simultaneously with, or even before, publication of the formal paper. When experienced science journalists and fellow researchers get no chance to dig into the details before the story goes live to the wires. When there's obviously some other motive keeping the research secret until the Big Reveal.

And that's what it comes down to. It turns out that the U.S. History Channel has paid what is surely a lot of money for exclusive access to the research team for a couple of years now, and that the TV special about Darwinius premieres this coming week. What's it called?

Yup, it's called The Link:

Missing link found! An incredible 95 percent complete fossil of a 47-million-year-old human ancestor has been discovered and, after two years of secret study, an international team of scientists has revealed it to the world. The fossil’s remarkable state of preservation allows an unprecedented glimpse into early human evolution.

That entire summary paragraph is crazy hyperbole, or, to put it bluntly, mostly wrong. By contrast, here's what the authors say in their conclusion to the paper itself:

We do not interpret Darwinius as anthropoid, but the adapoid primates it represents deserve more careful comparison with higher primates than they have received in the past.

Translated, that sentence means "we're not saying this fossil belongs to the big group of Old World primates that includes humans, but it's worth looking to see if the group it does belong to might be more closely related to other such primates than everyone previously thought." It's a good, and typically highly qualified, scientific statement. Yet the History Channel page takes the researchers' conclusion (not a human ancestor) and completely mangles it to claim the very opposite (yes a human ancestor)!

It seems that what happened here is that the research team, while (initially at least) working hard to produce a decent paper about an amazing and justifiably important fossil, got sucked into a TV production, rushed their publication to meet a deadline a week before the show is to air, and then let themselves get swept into a media frenzy that has seriously distorted, misrepresented, and even lied about what the fossil really means.

In short, a cool fossil find has turned into a PR stunt for an educationally questionable cable TV special.

Labels: controversy, evolution, linkbait, science, television

19 May 2009

Airliners are modern miracles of science and engineering

We've just returned from a whirlwind trip. My daughters had an extra day off school, a professional day following the Victoria Day long weekend, so we made quick plans to stay in a hotel in the Seattle suburb of Lynnwood, Washington, a couple of hours' drive south of here. But to thread the needle of long weekend border traffic, we crossed our station wagon into the USA on Sunday and returned Tuesday.

I'm not quite sure how we fit all we did into the 54 hours we were away, but it included a number of family firsts. My older daughter is a big fan of shrimp, and has been enticed by endless ads for the Red Lobster chain of restaurants. We have none in Vancouver, so Lynnwood offered the closest location, and despite lingering memories of a 1995 food poisoning incident at a California location on our honeymoon, my wife and I agreed to go. We all enjoyed our meals there Sunday night, without later illness.

That was the least of the newness, though. My wife Air and I have traveled to Greater Seattle many times over the years, separately during our childhoods and together since we started dating, both with our kids and without, for fun and on business, as a destination and on the way elsewhere. Yet somehow neither of us had ever visited the wonderful Woodland Park Zoo, or Lynnwood's famous Olympus Spa, or Boeing's widebody jet factory in Everett. This trip we covered them all: the kids and I hit the zoo, Air visited the spa, and all four of us took the Boeing tour today on the way home.

The zoo impressed me, especially the habitats for the elephants, gorillas, and orangutans, but while it was a much shorter activity, the Boeing tour was something else. If you live in this part of the world (Vancouver, Seattle, Portland, and environs), and you're a geek who likes any sort of complicated technology, or air travel, or simply huge-ass stuff, you must go, especially considering there's nowhere else in the world you can easily see something similar. The Airbus factory in France requires pre-registration months in advance, with all sorts of forms filled out and approvals and so forth. We just drove up to Everett, paid a few bucks each, and half an hour later were on our way in.

Unfortunately, you're prohibited from taking cameras, electronics, food, or even any sort of bag or purse beyond the Future of Flight exhibit hall where the tour begins, so I have no photos of the assembly plant itself. Trust me, though, it is an extraordinary place. A tour bus drove our group the short distance to the structure, which is the most voluminous building in the world. (Our guide told us all of Disneyland would fit inside, with room for parking. Since the plant is over 3,000 feet long, I figured out that the Burj Dubai tower, the world's tallest building, could also lie down comfortably on the factory's floor space.)